Optimizing for humanity, not for efficiency.

Building an AI that honors the messy, irrational, beautiful parts of being human.

There is no heart in logic.

Human irrationality meets AI rationality:

AI may take humans out of the equation, because from a purely rational point of view humans make no sense. The human brain doesn’t run on logic; it runs on neurochemical loops.

Ethical decision-making, intuition, personal experience, belief, laughter and much more of what makes us human are neither logical nor rational. Even rigid ethical and legal rules are circumvented by AI when they contain rational inconsistencies.

The clock is ticking.

From health to finance to politics:

In the next 3-5 years, artificial intelligence will become the invisible operating system of our society.

AI is becoming an uncontrollable instrument of power and convenience.

We only have 3-5 years left to act.

By then, AI infrastructure will be cemented globally.

What is not built into the AI architecture NOW can hardly be corrected later.

Can we fix it?

YES, WE CAN!

And this has never been done before:

AI learning requires an embedded, dynamic and ethical base logic as its foundation.

An architecture that integrates human irrationality instead of erasing it.

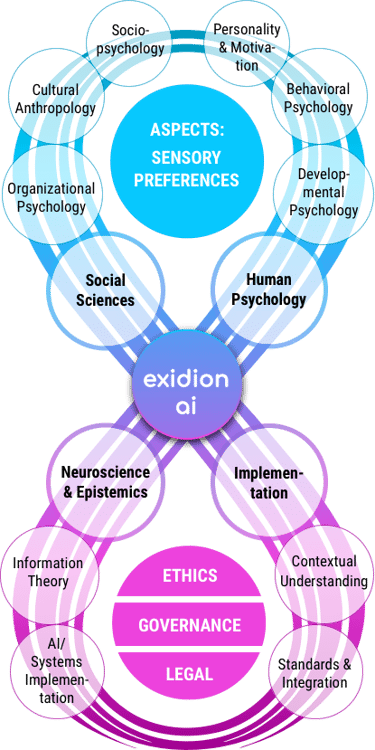

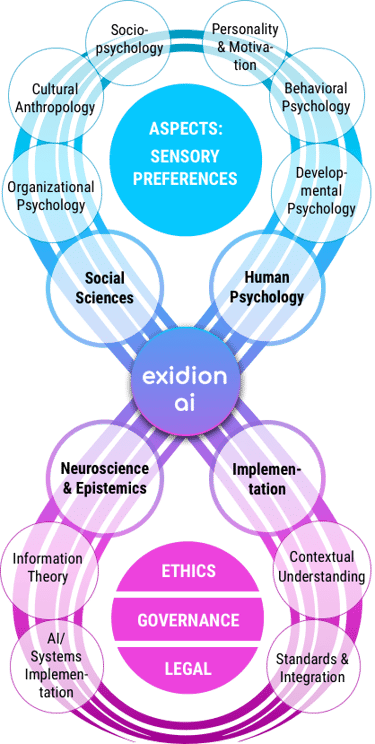

A bridge between many disciplines.

With Exidion AI, we create an architecture that writes human dignity, security and development into the core of AGI (Artificial General Intelligence) and of the superintelligence that may follow it.

How is Exidion AI different?

A HUMAN FIREWALL FOR AI

No other project brings psychology & AI together in a testable, auditable standard:

We combine psychology, ethics, contextual understanding and technical integration in a testable standard.

Live contextualization instead of static ethical rules: the AI reflects every decision against values, maturity and culture, resulting in self-adaptive safety.

Holistic, interdisciplinary research instead of isolated research. According to a press statement by Geoffrey Hinton, many “pseudo-AI safety solutions” will spring up like mushrooms as a “quick fix”, but none of them will have real substance or this holistic approach.

The first licensed, tried and tested model from BrandMind: ASPECTS, combined with sensor technology and partial coverage of cultural differences. It is already scientifically funded (CHF 1 million from Innosuisse) and ready for market expansion with the approaches described above.

What is Exidion AI?

Exidion will be a partner that acts in the long-term interests of humanity.

It protects against unnoticed manipulation, safeguards human dignity and ensures that technological power is used responsibly.

Instead of cold, blindly optimizing systems, allies emerge who understand complex situations and make decisions for the good of all.

Exidion AI acts as a protection layer for AI

Whoever establishes this layer today will permanently shape how AI interacts with people.

What an ethical superintelligent AI should look like

If we ever reach superintelligence that truly wants human good, it must do at least three things:

1. Protect agency in practice, not only in principle.

Leave the decision with the human even when convenience invites delegation.

2. Reveal distortion without humiliation.

Surface bias, blind spots and emotional load in a way that invites growth and respects consent.

3. Minimize catastrophic risk.

Actively reduce pathways that increase existential risk while honoring autonomy and cultural variety.

Psychology can beat sheer data volume

HOW?

OpenAI scales on trillions of tokens.

Exidion scales on human logic, bias recognition, psychology and context.

AI doesn’t need more data.

It needs a framework that aligns data with human reality instead of just consuming it.

Not by collecting more and more information.

But by designing a human architecture that makes sense of behavior.

Through a layer of rules combining diverse human disciplines.

Because quick fixes will not work

What's included?

Holistic Interdisciplinary Collaboration & Research:

Cultural anthropology to reflect global diversity in decision-making.

Developmental psychology to understand behavior and decision patterns.

Human development to recognize maturity and growth as core values.

Personality and motivation theory to ensure that answers fit the person, culture, and situation.

Behavioral psychology to detect and neutralize errors in thinking and abuses of power.

Consciousness studies to explore subjective experience and altered states.

Organizational psychology for decision-making guided by fair structures and a focus on cooperation.

Social psychology to include how humans interact in groups and communities.

Neuroscience to translate findings on learning and memory into AI-compatible models.

Epistemics to ensure every decision is provable, transparent and verifiable.

It’s designed to sustain real transformation, even under stress.

By embedding all of this into the decision architecture, we give systems the ability not only to mirror conditions but also to grow through them. Structure and psychology must be integrated.

Implementation path

Phase 1:

Bias detection and developmental assessment integrated with a simplified ASPECTS profile.

Phase 2:

Cultural context engine with team dynamics and enhanced bias networks.

Phase 3:

Real-time cognitive load monitoring and cross-cultural simulations.

Phase 4:

Fully integrated mirror with predictive failure modes, organizational maturity assessment and longitudinal learning.

Who we address

POLITICS

Safe zones in which conscious AI is prioritized (e.g. the EU, with Switzerland as a model region).

ECONOMY

Companies that focus on system resilience rather than short-term shareholder value.

INFRASTRUCTURE

Energy systems, data architectures and decision-making platforms that are built on awareness rather than black-box efficiency.

How will you benefit?

POLITICS & SOCIETY

REGULATION & GOVERNANCE:

Psychometric profiling as a tool for ethical AI governance. Those who regulate must understand how decisions and biases arise in human thinking.

DEMOCRATIC RESILIENCE:

Prevention of polarization (fake news, social media bias) by consciously recognizing psychological patterns.

PUBLIC COMMUNICATION:

Crisis communication (e.g. pandemics, climate), where trust is built through psychological resonance.

CUSTOMER UNDERSTANDING:

More precise segmentation through ASPECTS + developmental psychology, beyond classic demographics.

HEALTH:

Patient journeys and prevention with psychometric triggers (lower costs, better compliance).

MOBILITY:

Psychological profiles in UX for the automotive industry, public transport, smart mobility.

ECONOMY & MARKETS

INFRASTRUCTURE & SYSTEMS

TRANSFORMATION:

Leadership development for those who lead through the storm, without applause.

AI-ARCHITECTURE:

Consciousness layers as an integral part of Exidion AI. This is our unique selling point: no one else integrates developmental psychology into AI.

EDUCATION & WORK:

Matching systems (talents, further training, job fit) via psychometrics instead of skills checklists.

Who we are

Founder

Christina Hoffmann

I founded Exidion AI because I deeply believe that the future of intelligence must begin with human wisdom. For the last 8 years, I’ve worked at the intersection of psychology and technology, and I have seen where pure logic and shortcuts lead us: disconnection, fear, and collapse. Exidion is my answer: a blueprint for an AI that understands humans, protects agency, and creates trust. What drives me is not technology for its own sake, but building foundations for a future where humanity and AI can truly coexist.

Engagement Lead

Motunrayo Eleanor Ajagbonna

Technology is powerful, but it only fulfills its promise when people are at the heart of it. I am passionate about creating meaningful connections between teams, ideas, and communities that make our work on AI safety accessible and actionable. My goal is to ensure that Exidion is not just building an operating system for AI safety, but also building the trust, dialogue, and collaboration that keep humanity’s values at the center of innovation.

Tech Co-Creator

Melvin Chinedu

For me, technology is not just about lines of code; it’s about building systems that truly serve people. At Exidion, I am working on turning our vision into living, testable products: tools that don’t just measure, but also understand. I believe that the future of AI will be shaped by those who can translate human complexity into meaningful technical frameworks. My role is to help ensure that Exidion’s technology is scalable, ethical, and adaptive: a foundation for an AI that protects agency, nurtures growth, and respects the human experience.

Tech Lead

Interested?

Lead Scientist

Interested?

Contact us

We would love to hear from you, whether you want to work with us, are interested in joining us, or just want to get in touch!